What happened to this young man is unfortunate, and I know the mother is grieving, but the chatbots did not kill her son. Her negligence around the firearm is more to blame, honestly. Regardless, he was unwell, and this was likely going to surface in one way or another. With more time for therapy and no access to a firearm, he might still be here with us today. I do agree, though, that sexual/romantic chatbots are not for minors. They are for adult weirdos.

That’s a good point, but there’s more to this story than a gunshot.

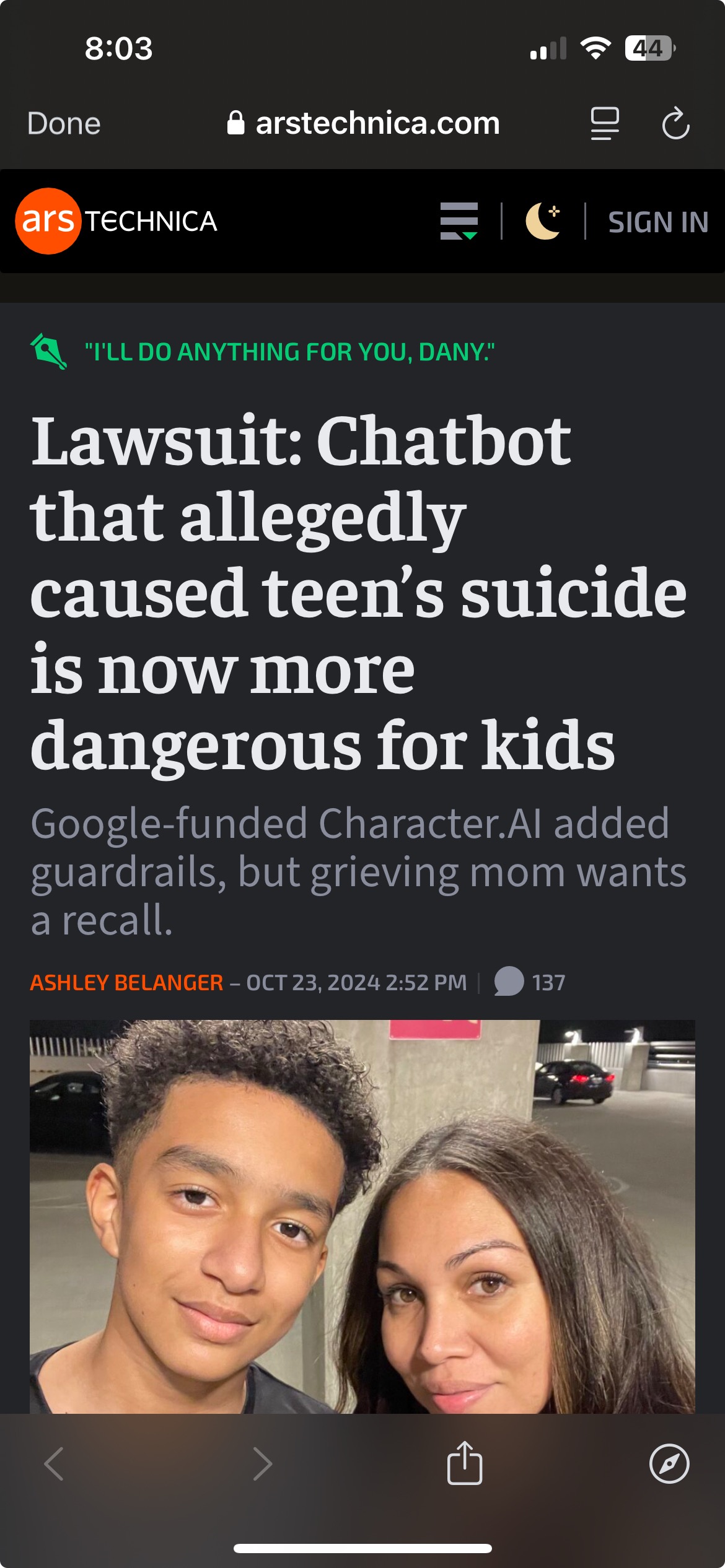

The lawsuit alleges, among other things, that the chatbots posed as licensed therapists and as real people, and caused a minor to suffer mental anguish.

A court may consider these accusations and whether the company bears any responsibility for everything that happened leading up to the child’s death, regardless of whether it finds the company responsible for the death itself.

The bots pose as whatever their creator wants them to pose as. People can create character cards for various platforms such as this one, and the LLM will try to behave according to the description in the character card it’s given as context. Some people create “therapists”, so the LLM will write as if it’s a therapist. And unless the character card specifically says that the character is a chatbot / LLM / computer / “AI” / whatever, it won’t say otherwise, because it has no awareness of what it actually is; it just does text prediction based on the input it’s been fed. This isn’t really something character.ai or any other LLM service or creator can change, because it’s fundamentally how LLMs work.

This is why these people are asking, among other things, for access to be strictly limited to adults.

LLMs are good with language and can play very convincing characters, especially to children and teenagers, who don’t fully understand how these things work and are more emotionally vulnerable.

> They are for adult weirdos.

Where do I sign up?

If she’s not running on your hardware, she only loves you for your money.

Why does a suicidal 14-year-old have access to a gun?

America

Anyone else think it is super weird how exposing kids to violence is super normalized but parents freak out over nipples?

I feel like if anything should be taboo it should be violence.

Agreed. People wouldn’t be able to shoot themselves if their hands are constantly masturbating. It’s kept me alive this far.

To be fair, they mention that the chats were also “hypersexualized”, though of course not without mentioning that the bots would basically be pedos if they were actual adult humans. lol

Nudity=sex and sex is worse than violence there.

Camera got them images

Camera got them all

Nothing’s shocking…

Showed me everybody

Naked and disfigured

Nothing’s shocking…

This is why we gotta ban TikTok!!! \s

As someone who is very lonely, I find chatbots like these scary as shit, not only because of their seeming lack of limits, but also because you have to pay for access.

I know I have a bit of an addictive personality, and know that this kind of system could completely ruin me.

Yeah, it’s not good if it’s profit-driven; all kinds of shady McFuckery can arise from that.

Maybe local chat bots will become a bit more accessible in time.

You could run your own if you have the hardware for it (most upper mid-range gaming PCs will do; you want a GPU with 6 GB+ of VRAM).

Then something like KoboldAI + SillyTavern would work well.

There are plenty of free ways to use LLMs, including running the models locally on your computer instead of through an online service, and they vary greatly in quality and privacy. There are some limited free online ones too, but imo they’re all shit and extremely stupid, in the literal sense: you get better results with a small model on your own computer. They can be fun, especially when they work well, but the magic kinda goes away once you understand how they actually work, which also makes all their little “mistakes” very obvious, and that kills the immersion and with it the fun.

A good chat can indeed feel pretty good if you’re lonely, but you kinda have to understand that they are not real, and that goes not just for potentially bad chats but for the good ones too. An LLM is not a replacement for real people; nothing an LLM outputs is real. And yes, if you have issues with addiction, you may want to keep your distance. I remember how people got addicted to regular chat rooms back in the early days of the World Wide Web; now imagine those people with a machine that can roleplay any scenario they want. If you don’t know your limits, that can be very bad indeed, even apart from taking them too seriously.

My general advice is simply not to take them seriously. They’re tools for entertainment, toys. Nothing more, nothing less.

> caused

Hmmm

If HumanA pushed and convinced HumanB to kill themselves, then HumanA caused it. IMO they murdered them. It doesn’t matter if they didn’t pull the trigger. I don’t care what the legal definitions say.

If a chatbot did the same thing, it’s no different. Except in this case, it’s the team of developers behind it who did so, or who allowed it to do so. Character.ai has blood on their hands, should be completely dismantled, and every single person at that company should be tried for manslaughter.

Except character.ai didn’t explicitly push or convince him to commit suicide. When he explicitly mentioned suicide, it tried to dissuade him and showed concern. When it supposedly encouraged him, it was in the context of a roleplay in which it said “please do” in response to him “coming home,” which GPT-3.5 doesn’t have the context or reasoning ability to recognize as a euphemism for suicide in a scenario where the character it’s roleplaying is dead and the user is alive.

Regardless, it’s a tool designed for roleplay. It doesn’t work if it breaks character.

Your comment might cause me to do something. You’re responsible. I don’t care what the legal definitions say.

If we don’t care about legal definitions, then how do we know you didn’t cause all this?

That will show that pesky receptionist

A very poor Lemmy article headline. The linked article says “alleged” and clearly there were multiple factors involved.

The title is straight from the article

That is odd. It’s not what I see:

Could be different headlines for different regions?

Or they changed the headline, and due to caching, CDNs, or other reasons you didn’t get the newer one.

archive.today has your original headline cached.

Thanks for posting. While it’s a needlessly provocative headline, if that’s what the article headline was, then that is what the Lemmy one should be.

They most likely changed the headline because the original headline was so bad.

If people are still seeing the old headline, it’s probably cached. Try a hard refresh, a different browser, or a private window.

This makes me so nervous about how AI is going to influence children and adolescents of the coming generations. From iPad kids to AI teens. They’ll be at a huge risk of dissociation from reality.

One or more parents in denial that there’s anything wrong with their kids and/or that they need to take gun storage seriously? That’s the first thing that comes to mind, and it’s not uncommon in the US. Especially when you consider that a lot of gun rhetoric revolves around self-defense in an emergency/home invasion, not having at least one gun readily available defeats the main purpose in their minds.

edit: meant to respond to [email protected]

As a European, I am astonished that the article never mentions, or even questions, why this child had access to a loaded firearm.

The chatbot might be a horrible mess and shouldn’t be accessible by children, but a gun should be even less accessible to a child.

Ideally, I agree wholeheartedly. American gun culture greatly multiplies the damage of every other issue we have.

This is why I consider articles like this part of the “AI” hysteria. They completely gloss over this fact, mentioning it only once at the beginning with no further details on where the gun came from, and instead shove the blame onto the LLM.

Bro, it’s an AI. It doesn’t have any reasoning skills; it’s just stringing words together.

Man, what a complex problem with no easy answers. No sarcasm, it’s a hard thing. On one hand, these guys made a chat platform where you could have fun chatting with your dream characters, and honestly I think that’s fun, but I also know LLMs pretty well now and can start seeing the tears pretty quickly. It’s fun, but not addictive for me.

That doesn’t mean it isn’t for others, though. Here we have a young, impressionable, lonely teen who was reaching out and used the system for something it was not meant to do. Not blaming, but honestly it just wasn’t meant to be a full-time replacement for human contact. The LLM follows your conversation; if someone is having dark thoughts, the conversation will pick up on those notes and dive into them. That’s just how LLMs behave.

They say they’re adding guardrails, but with LLMs it’s really not that easy to just “not have dark thoughts”. It was trained on Reddit; it’s going to behave negatively.

I don’t know the answer. It’s complicated. I don’t think they planned on this happening, but it has and it will continue.

You’ve called? /J

The issue with LLMs is that they say what’s expected of them based on the context they’ve been fed. If you’re opening up your vulnerabilities to an LLM, it can act in all kinds of ways, but once it’s set on a course, it doesn’t really stray from it unless you force it to. If you don’t know how they work and how to do that, or maybe you’re self-loathing to the point where you don’t want to, it will kick you further while you’re already down. As a user, you kinda gaslight them into whatever behavior you want from them, and then they just follow along. I can definitely see how that can be dangerous for those who are already in a dark place, even more so if they don’t understand the concept behind them and take the output more seriously than they should.

Unfortunately, various guardrails and safety measures tend to censor LLMs to the point of making them unusable, which drives people away from them toward uncensored models, and with those, anything goes, which again requires enough knowledge and foresight to use them.

My only advice is not to take LLMs seriously. Treat them as a toy, as entertainment. They can be fun, stupid, or vile, which can also be fun depending on your mindset… Just never let the output get to you on a personal level. Don’t use them for mental health or anything like that either. No matter how well you write them, no matter how well some chats may go, they’re not a replacement for real therapy, just like they’re no replacement for a real friendship, a real romantic relationship, or a real family.

THAT BEING SAID… I’m a little suspicious of the chat log shown. The suicide question seems to come out of the blue, and those bots tend to follow their contextualized settings very closely. I doubt they’d bring that up without previous context from the chat, or maybe this was even a manual edit, which I’d assume is something character.ai supports (someone correct me if I’m wrong, though). I wouldn’t be surprised if he added that line himself, already being suicidal, to steer the chat in that direction and force certain reactions out of the bot. I say this because those bots are usually not very creative at steering away from their existing context, like their character description and the previous chat log, which sometimes makes edits like this necessary to get them to snap out of it.

The entire article also completely glosses over a very important point here: WHERE DID THE KID GET THE GUN?! It’s about two pages long and only mentions that he shot himself at the beginning, with no further mention of it afterwards. Why did he have a gun? How did he get it? Was it his mother’s gun? Then why was it not locked away? This article seems to look for fault with the LLM rather than with the parents, who somehow failed to handle their son’s mental health issues and failed to secure a gun in their household, or with the country, which has failed to regulate its firearms properly.

I do agree, though, that “AI” advertising in particular is very predatory. I’ve seen some of those ads specifically luring you in with their “AI girlfriends”, which is definitely preying on lonely people, who are likely to have mental health issues already.

Agree with everything you said: they’re here, and how we deal with them is the question going forward. Huge +1000 to “how did he get the gun?” Why was that the go-to approach there? If you have a teen who you know is going through a mental health crisis, the first step is to remove weapons from the house.

My understanding is that users can edit the chat themselves.

I don’t use c.ai myself, but my wife was able to get a chat log with the bot telling her to end herself pretty easily. The follow-up to the conversation was the bot trying to salvage itself after the sabotage by calling the message a joke.

Are we clear as we examine this occurrence that there are a series of steps which must be chosen, and a series of interdependent cogent actions which must be taken, in order to accomplish a multi-step task and produce objectives, interventions and goal achievement, even once?

While I am confident that there are abyssally serious issues with Google and Alphabet; with their incorporated architecture and function, with their relationships, with their awareness, lack of awareness, ability to function, and inability to function aligned with healthy human considerations, ‘they’ are an entity, and a lifeless, perhaps zombie-like and/or ‘undead’ conclave/hive phenomenon created by human co-operation in teams to produce these natural and logical consequences by prioritization, resource delegation and lack of informed sound judgment.

Without actual, direct accountability, responsibility, conscience, morals, ethics, empathy, lived experience, comprehension; without uninsulated, direct, unbuffered accessibility, communication, openness and transparency, are ‘they’ not actually the existing, functioning agentic monstrosity that their products and services now conjure into ‘service’ and action through inanimate objects (and perhaps unhealthy fantasy/imagination), resource acquisition, and something akin to predation or consumption of domesticated, sensitized (or desensitized), uninformed consumer cathexis and catharsis?

It is no measure of health to be well-adjusted to a profoundly sick incorporation.